“Facts and their properties” has occupied my thinking for much of the past year. I suggested earlier that the current term *fact* needs to be supplemented by terms which express the idea that many matters which were once considered factual have lost their credentials and have since been dishonourably discharged.

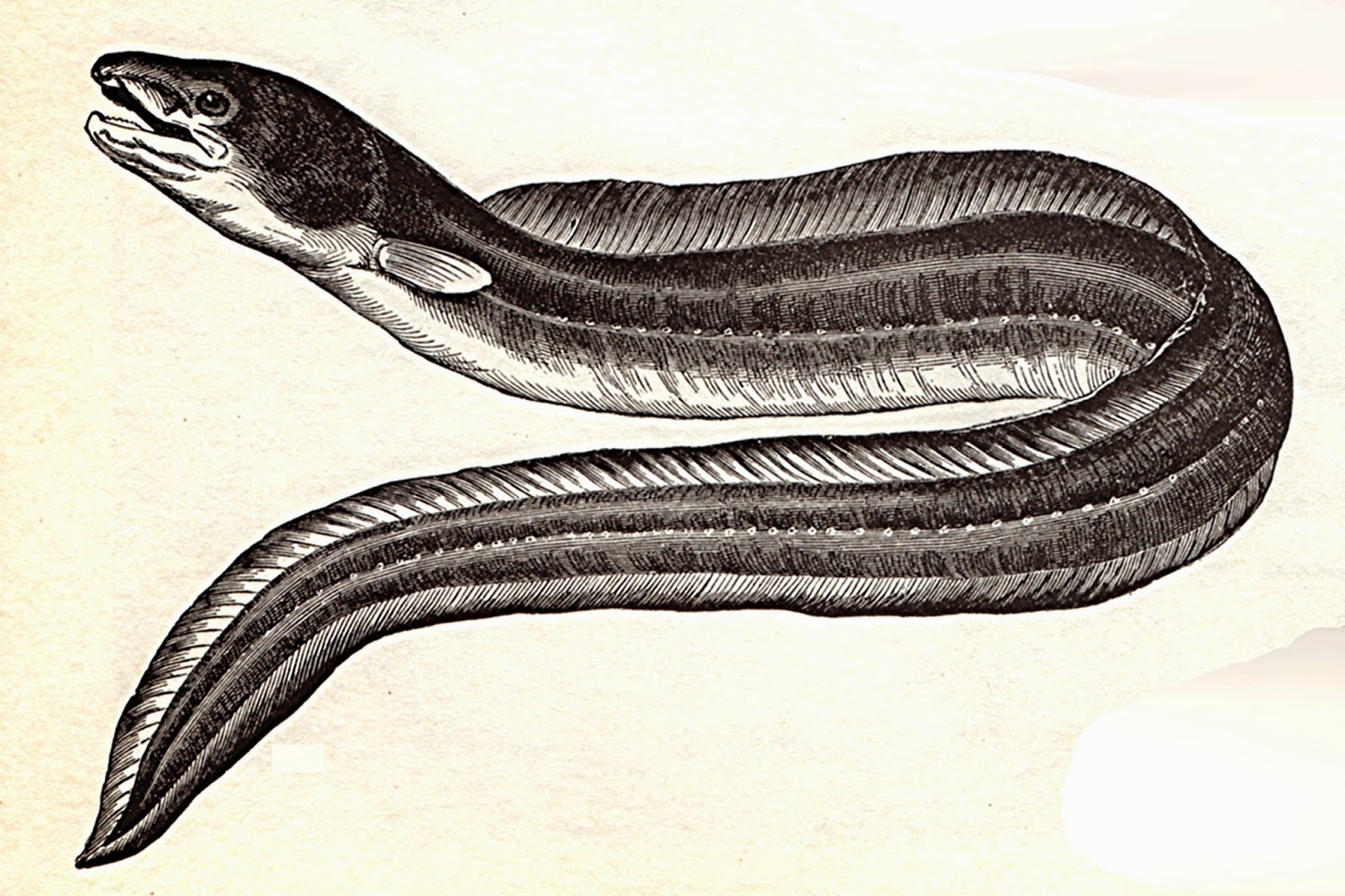

A famous example of this was the discovery (c. 1886) that eels possess sexual organs, i.e., that they reproduce in a “normal” manner by sexual coupling, a discovery consonant with Darwin’s view that fish reproduced sexually and were not worms reproducing through spontaneous generation, the view advocated by Aristotle (c. 330 BC) two thousand years earlier. This 19th-century discovery meant that statements supporting “spontaneous generation” as a mechanism for generating new life-forms were weakened to the point of extinction; such statements therefore joined the ranks of “factoids”, as part of dead science. In short, spontaneous generation was no longer an option which could be summoned to account for the emergence of new species.

Here, then, is a model of how statements which describe the world in empirically false terms are, at some later stage, replaced by a new body of statements, by new knowledge. It is a complicated process which often meets fierce resistance from those whom their fellow citizens have entrusted to guard our hoard of “knowledge”, like the Nibelungen’s guardian dragons protecting the golden treasure of the Rhine. Old treasured tales do not pass gently into the night but fiercely resist attempts to demystify them. It isn’t that some newer theory is found to be correct, but that finally someone convinces others of the error of their ways, and the old theory is found to be faulty, perhaps in several respects. Spontaneous generation, the pre-Darwinian theory used to account for new species, was a plausible theory at the time these matters were first discussed, but in the end it was deemed inadequate and was therefore rejected by the scientific community as a whole, and by those we had entrusted to safe-keep our knowledge.

In summary, the challenge faced by biologists at the turn of the 19th century was to discover evidence which would either suffice to continue to support an older theory of speciation (as sanctified by Aristotle two thousand years earlier) or contradict the theory proposed by Darwin and others during the first half of the 19th century, namely that speciation was an ongoing (contemporary) process powered by a combination of mutations in cells (about which very little was known at the time) and the adaptation of such mutants to their ecological niche. These were different but complementary tasks: (1) find supporting evidence for two conflicting positions about speciation and/or (2) find evidence which contradicts one, or both, of the theories proposed to explain the large variety of species observed and the source of their often small inter-species differences. At the time these matters were first debated, both microbiology and especially cell biology were in their infancy, still half a century away from the great breakthroughs of the late 1950s. The initial problems were set by conflicting theories which had been formulated when knowledge about these matters was sketchy and mostly conjectural. Historically, this was a case in which such problems were approached and resolved step by step, often secretively, through empirical investigation.

But the history of our knowledge about the world also records many cases where solutions were adopted ex-cathedra, that is, by declaring a solution to a problem based primarily on arguments from broadly defined first principles. If there were public disagreements about these, they concerned how rigorously the deductions had been derived from the assumptions adopted. These first principles, as they came to be known, referred to assumptions which were not themselves directly challenged, but which were assumed to depict and reflect an existing state of affairs, either on the grounds that they were self-evident to the theorist (the person who mattered) or because they appeared to be the best (as in the most rational) available to the writer and his friends under the prevailing circumstances.

The most persuasive cases cited from the past of solutions reached in this manner were the proofs of Euclidean geometry. These proofs were available to the educated elite of earlier historical periods, who assumed that space is best represented by a two-dimensional flat surface. Thus, all the conclusions reached by Euclid and his many successors over the next 2,000 years were deemed to hold when applied to what is basically a “flat earth” model of the earth; however, these conclusions did not hold for curved (concave or convex) surfaces, i.e., they did not hold for the surface of a globe. The assumptions that the earth is flat, that the earth is stationary, that celestial objects move relative to the earth, that the movement of celestial bodies is uninfluenced by their proximity to the earth, that light and sound travel through a medium and, specifically, that light travels in a straight line, etc., were challenged only gradually, some of them not until the end of the 19th century. When these older assumptions were challenged and exposed to experimental investigation, this change in approach also marked the end of solving such problems by deduction alone.

Of course, deductions from first principles remained valid when carried out strictly according to a priori rules of logic, but the deductions themselves could not answer questions about what composed the universe to start with, or how things worked during “post-creation” periods! Such questions demanded that any claim about a state of affairs be independently demonstrated, that is, that there be a correspondence between a state of affairs as perceived and what was being asserted about it. Under some conditions the meeting of two points in space does not make “sense” and must therefore be viewed as an “impossible act”!

Once it was accepted that empirical investigations could reveal new facts, the door was opened to the (dangerous?) idea that old, existing facts could be tarnished, even faulted, and perhaps that new discoveries could be superior to old facts. Superior to which old facts? To all, or only to some? The facts declared to be such were supported by the first layer of assumptions made. It was a dangerous idea.

The history of comets is a case in point. Comets had been reported for thousands of years by both Eastern and Western sky-watchers, but were thought to be aberrations from a pre-ordained order of things which portended unusual events, like the birth and death of prominent people (e.g., Caesar’s death, Macbeth’s kingship, Caliban’s fate; Shakespeare was, as is well known, well-versed in the occult, as were many in his audience). But where did comets come from, and how did they travel through the (layered) sky? What propelled them, and for what goodly reasons did they come menacingly across the sky? It required special agents to interpret such rare public events. These interpreters were, moreover, thought likely to be messengers from the gods, that is, inspired seers who were believed (before the advent of Christianity) to be equipped to answer especially difficult questions! Thus, if one assumed (as was common for thousands of years) that celestial bodies travelled around the earth on fixed translucent platforms, perhaps on impenetrable crystalline discs, each of which was “nailed” permanently to an opaque or translucent wall in the sky, this belief opened up a number of possible answers to previously unanswered questions. (We create worlds for ourselves which make it possible to answer questions which bother us!)

There were other assumptions involved, for example the assumption that whoever created the world (the great mover, as assumed by some early Greek philosophers) must also have created everything perceivable in accordance with a perfect plan which employed perfect forms, e.g., perfect geometric forms and patterns. Such assumptions had to be jettisoned before one could consider alternatives which dispensed with the notions (a) that perfect forms had existed ab initio, even before the world did, or (b) that anything imperfect necessarily referred to an illusion, a distortion or an aberration and was therefore itself unnatural! Comets, according to ancient astronomers, priests and others, were not to be viewed as natural phenomena but as unnatural aberrations, beyond the power of anything but super-intelligent entities, a species which could intervene in the normal, divine order of things! Thus our ancestors provided for the possibility that a construction could arise which was built by following imperfect rules of construction and which could, by virtue of this, also implode unexpectedly!

The last paragraph illustrates graphically what I have tagged as ex-cathedra procedures, and points to the alternative: a naturalistic philosophy based on the assumption that knowledge attained by empirical discovery is inherently superior to knowledge deduced from first principles. The issue is to justify why either choice would be superior in effect to its alternative: it has remained a casus belli between different factions of metaphysicians for two and a half thousand years, perhaps even longer. We have, of course, few records, if any, which would support either position fully. This may perhaps change in future as we increasingly and assiduously store all earlier findings and speculations, regardless of how well supported, before we undertake the awesome task of assessing each on its merits. There are no ultimate judges, as far as we know, who can undertake this task and also assume responsibility for any final recommendations they may make!

We are, in fact, demonstrably more adept at describing the outside world — our common world. Some say that this is so because we live in a materialistic culture which is primarily focussed on the world around us. (Thus culture plays a “shaping” role).

How does one inform another person what “the Jabberwock” refers to? No real definition of this creature appears in the poem in *Through the Looking-Glass*. However, we have an image of it from an illustration in the book; that is, we have admittedly only a vague concept of it, which we share with all other lovers of the Alice books and their fabulous, unique menagerie of characters.

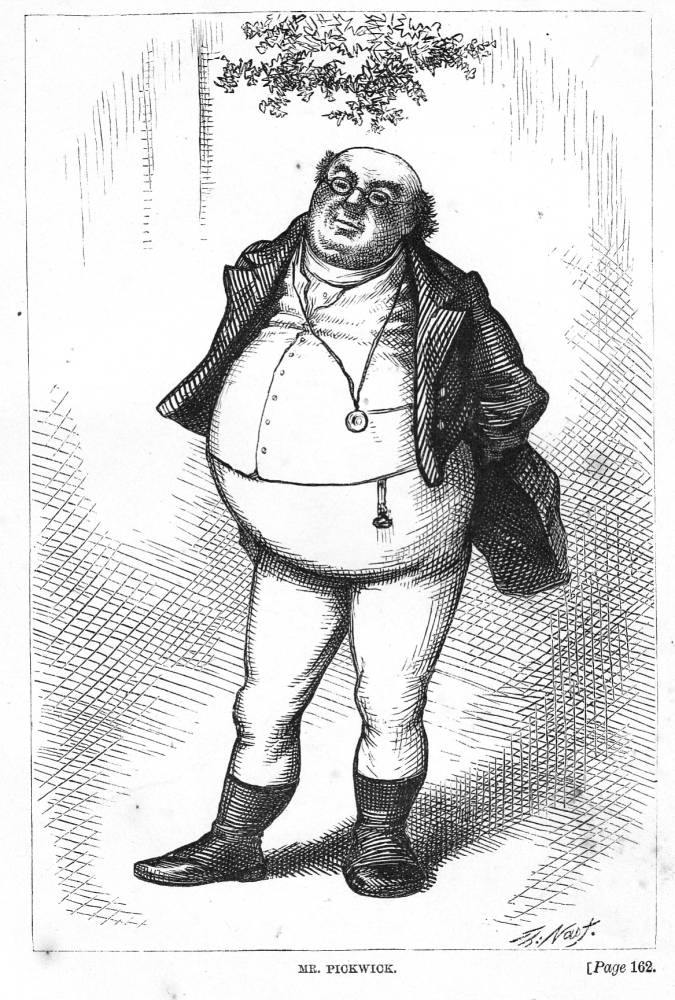

To refer to someone as “Pickwickian” also illustrates this point. Mr. Pickwick is a character described in loving detail by Charles Dickens. He has since become a universal image for a certain kind of 19th-century member of the English middle class. He cannot be described well or adequately by a single sentence, or even by a small set of sentences; rather, he in a sense “emerges” through acquaintance with the many descriptions given of him throughout the novel bearing his name. He has become what Freudians called an “imago”, a prototype based strongly on unconscious factors.

Clearly, whenever a language adopts or imports words and phrases, that language grows, regardless of whether its new terms are derived from a foreign language or are “home-made”, that is, invented by its current speakers! Modern American English is full of such “home-grown” words and phrases. The word *rap*, for example, has several meanings, including the act of talking or discussing freely, openly or volubly (as suggested in Wikipedia). It is also related to the idea of establishing rapport, that is, developing a sense of fellowship among speakers and members of a group. These group members will then determine which of its several meanings apply! It is a case where the meaning of a term is strongly influenced by its context, not only by its dictionary definition(s). In short, the meaning of a word is usually (perhaps most often) multi-determined. Thus, to search for a unique meaning is, generally speaking, foolhardy.

The underlying principle was already voiced and practiced with consummate and diabolical skill by Joseph Goebbels, Hitler’s infamous henchman, during the long nights of 1933–1945. His mantra can be expressed as follows:

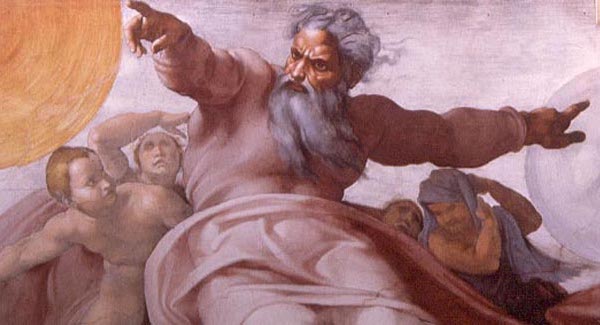

One inevitably understands the present in terms of the past; that is, one has to know about the critical, salient errors made in the past since, as far as I know, there is no error-less learning, no future science without a past science whose paths were studded with pot-holes and major diversions into the unknown. Humans may stumble, but many find a path that leads somewhere.

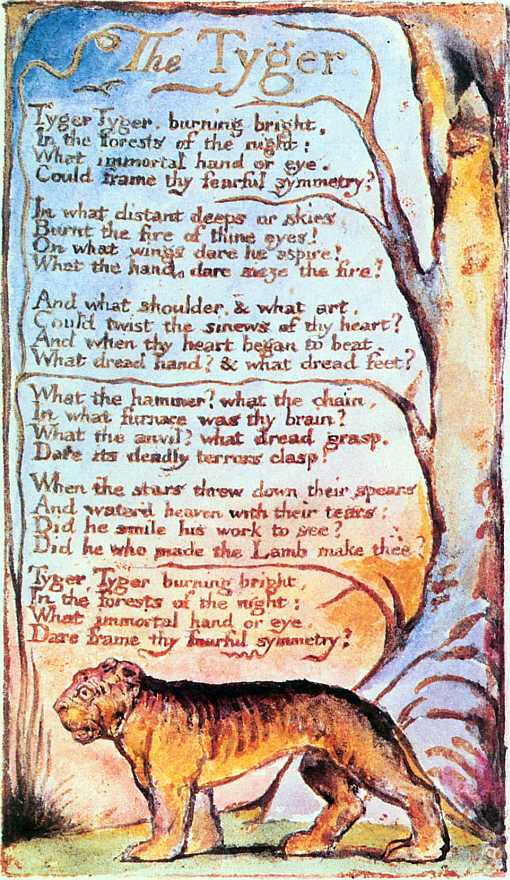

A particular version of this deistic belief claims that their god not only programmed and planned the universe as we have come to know it so far, but also plans to dismantle it at some time in the future. It is part of an apocalyptic vision (not shared by all deists) and is distinctly Jabberwockian, as previously defined. So it is argued that, just as there was a beginning, perhaps a prelude to the show, there will also be an end-play, a Götterdämmerung. The “gods” will move out and find a better playing field, but before they do so they will curse our world. Something to look forward to, especially when “all those legs and arms and heads, chopped off in a battle, shall join together at the latter day” (*Henry V*, Act IV, Scene 1): a happy end game for some and perdition for most others.

To give an example: the Jabberwock, described by Lewis Carroll in *Through the Looking-Glass*, is an imagined creature that has no counterpart in our normal world, although it is kith and kin to other imagined creatures of the “monster class”. Compare this to a predatory tiger which stalks the open fields in search of prey. This tiger is not described by “Tyger Tyger, burning bright” but can be described in many ways in ordinary language. We can therefore talk about an AJ creature (short for Anti-Jabberwockian) as well as a J creature, which may have “eyes of flame”, “whiffle” as it moves and “burble” too. It had a neck! The rest is left to our imagination, stimulated by the sounds of a set of non-descriptive words. Such creatures exist only in our minds, in our imagination, and have no counterparts in nature. Don’t look for them! One can add to their attributes, but we do not expect that the same additional attributes will be discovered by other people as they roam through their imaginations.

The J’s therefore believe in the (real) possible existence of creatures of their imagination, just as they believe in their own existence (I think, therefore I am) and in the reality of butterflies. The follow-up question is: “Which creatures of their imagination do not exist and are therefore imaginary?” This question demands that we submit a set of criteria which demarcates creatures that are officially real from those deemed non-real. By what standards can these two classes of creatures be reliably identified?

I interpret this to mean that anything claimed “to be” also exists. Note that terms like *entity*, *substance* and *object* (or rather their Greek counterparts) were already used by Greek writers before 450 BC and have remained a vital part of philosophers’ vocabulary from then until the present day. Of course, their meanings have changed over time. However, our uninterrupted use of these terms indicates that we are able to make good use of them even when their meaning (as in interpretation) changes. As with so many words, their meanings and interpretations have changed often, even imperceptibly, over the centuries.

An early example of such a challenge occurred around 550 BC, when several Ionian thinkers suggested that the physical world (the world of physical objects and their interactions) had been constructed by unknown forces from one or more fundamental materials, i.e., atoms, the indivisibles. Two factors were posited: first, material objects in their most fundamental, most primitive forms; and second, the manner in which these were structurally related. The former (*atoms*) were not considered by Greek thinkers to be pliable, whereas *forms* or *structures*, it was assumed, were. It was not clear by what rules these “atoms” had been assembled to become new entities, new phenomena, but it was assumed that knowledge about how this happened could ultimately be gained, although it was not clear how this knowledge could be gathered.